This article explains how to perform deformable image registration using deep neural networks.

What is Image Registration?

Image registration is the process of transforming different sets of data into the same coordinate system. The spatial transformation that relates two images can be rigid (translations and rotations), affine (which adds scaling and shears, for example), a homography, or a complex deformable model.
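To make the difference between these families concrete, here is a minimal NumPy sketch that writes a rigid transform and a shear as 3×3 matrices in homogeneous coordinates and applies them to 2D points. The function names (`rigid`, `shear`, `transform_points`) are made up for this illustration.

```python
import numpy as np


def rigid(theta, tx, ty):
    """Rotation by `theta` (radians) followed by a translation (tx, ty)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, tx],
                     [s,  c, ty],
                     [0.0, 0.0, 1.0]])


def shear(k):
    """Horizontal shear: an affine transform that is not rigid."""
    return np.array([[1.0, k, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0]])


def transform_points(matrix, points):
    """Apply a 3x3 matrix to an (N, 2) array of (x, y) points."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    out = homogeneous @ matrix.T
    # Dividing by the last coordinate also covers homographies,
    # whose last row is not (0, 0, 1).
    return out[:, :2] / out[:, 2:3]


points = np.array([[0.0, 0.0], [0.0, 1.0]])
print(transform_points(rigid(0.3, 2.0, -1.0), points))  # distances preserved
print(transform_points(shear(0.5), points))             # distances changed
```

A rigid transform keeps the distance between any two points, while a shear does not. A deformable model goes one step further: it cannot be written as a single matrix, because every pixel gets its own displacement.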

For rigid image registration, I recommend that you read the article written by J. Joslove and E. Kamoun. In the following, let us focus on deformable image registration, which is the most general use case.

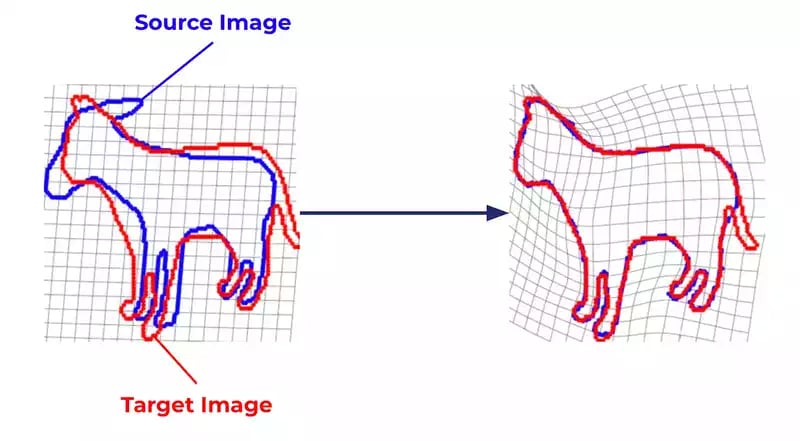

Deformable registration of a source image to a target image consists of finding a pixel-wise displacement field such that the source image, once warped by this field, matches the target image.

How can I do deformable image registration?

There are several ways to do deformable image registration: optimal transport, MRFs (Markov Random Fields), and other optimization-based techniques. In this article, I would like to describe a more recent technique that is arguably the simplest to implement: the deep learning-based one.

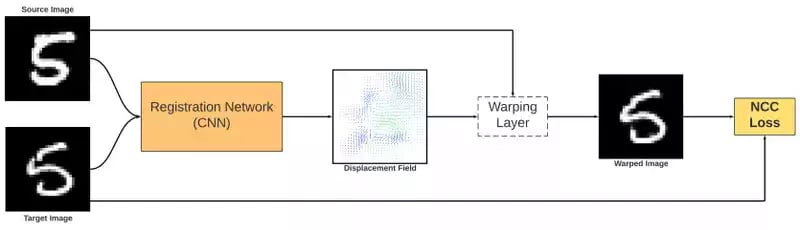

Here is a schematic of the training process of a deep learning-based pipeline for deformable image registration:

Step 1: Outputting the Displacement Field

Feed a (Source, Target) pair of images to the registration network, which consists of a CNN (Convolutional Neural Network), often a U-Net.

This CNN takes the pair of images as input and outputs a Displacement Field. The displacement field is simply a tensor that maps every single pixel (x, y) in the source image to a displacement vector (Δx, Δy).
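As a rough illustration of the shapes involved, the NumPy sketch below builds the network input and a hand-made displacement field. The image size, the channel-last layout `(H, W, 2)` and the `(dx, dy)` ordering are assumptions made for this example; frameworks and libraries differ on these conventions.

```python
import numpy as np

H, W = 4, 6  # hypothetical image size

source = np.random.rand(H, W)
target = np.random.rand(H, W)

# Network input: the (Source, Target) pair stacked as two channels.
pair = np.stack([source, target])  # shape (2, H, W)

# Network output: one (dx, dy) vector per pixel. Here the field is filled in
# by hand to mimic a uniform shift; in the real pipeline the CNN predicts it.
field = np.zeros((H, W, 2))
field[..., 0] = 1.5   # dx: 1.5 pixels along x for every pixel
field[..., 1] = -0.5  # dy: half a pixel along y, in the negative direction

dx, dy = field[2, 3]  # displacement vector of the pixel at x=3, y=2
print(pair.shape, field.shape, dx, dy)
```

Note that displacements are real-valued: nothing forces a pixel to move by a whole number of pixels.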

Step 2: Computing the Warped Image

Once you have the displacement field, you can apply it to the Source image.

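To do so, for each pixel (x, y), you compute the sampling location (x’, y’) = (x+Δx, y+Δy). Then, you sample the source image at these locations to create the warped image. Since (x’, y’) rarely falls exactly on a pixel, the value is typically interpolated (bilinearly, for instance), which also keeps the operation differentiable. This step is done in the Warping layer.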
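The warping step can be sketched in plain NumPy as follows. This is a minimal illustration, not the code of any particular library: it assumes a 2D grayscale image, a field of shape `(H, W, 2)` holding `(dx, dy)` in pixels, bilinear interpolation, and sampling locations clamped to the image border.

```python
import numpy as np


def warp(source, field):
    """Warp a 2D image with a displacement field of shape (H, W, 2)."""
    h, w = source.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")

    # Sampling locations (x', y') = (x + dx, y + dy), clamped to the image.
    x = np.clip(xs + field[..., 0], 0, w - 1)
    y = np.clip(ys + field[..., 1], 0, h - 1)

    # Integer pixel corners surrounding each sampling location.
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)

    # Bilinear interpolation between the four corners.
    wx = x - x0
    wy = y - y0
    top = (1 - wx) * source[y0, x0] + wx * source[y0, x1]
    bottom = (1 - wx) * source[y1, x0] + wx * source[y1, x1]
    return (1 - wy) * top + wy * bottom


source = np.arange(16.0).reshape(4, 4)
field = np.zeros((4, 4, 2))
field[..., 0] = 0.5  # sample half a pixel to the right everywhere
print(warp(source, field))
```

In a deep learning framework, the same operation is usually provided as a differentiable layer, so that gradients can flow from the loss back to the displacement field.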

Step 3: Computing Similarity Warped ↔ Target

Now, we have to measure how good our registration is in order to give the network an optimization goal (a Loss Function).

Two functions are commonly used to measure the similarity between images:

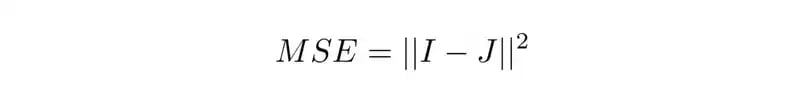

- The MSE (Mean Squared Error) Loss:

The MSE measures the pixel-wise difference between two images: the lower it is, the more similar the images are. If the two images are strictly the same, the MSE between them is equal to 0.

The MSE between two images I and J is defined by:

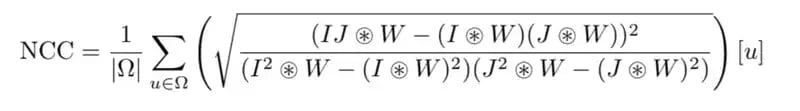

- The NCC (Normalized Cross-Correlation) Loss:

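The NCC measures how strongly the intensities of two images are correlated. Because the intensities are mean-centered and normalized, it is insensitive to linear changes in brightness and contrast, which makes it more robust than the MSE when the two images do not share the same intensity range. In practice, it is computed over local windows that slide across the images, which makes it similar in nature to a convolution. It is equal to 1 for perfectly correlated images, so the loss to minimize is typically its negative.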

If W is a constant kernel of size K, the NCC between two images I and J is defined by:
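Both losses can be sketched in a few lines of NumPy. The `local_ncc` function below assumes one common local form of the NCC: for each K×K window, the sum of products of the mean-centered intensities of I and J, divided by the square root of the product of their sums of squared deviations, then averaged over all windows. The function names, the `eps` stabilizer and the final averaging are assumptions made for illustration, not necessarily the exact definition intended above.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def mse(i, j):
    """Mean squared error between two images of the same shape."""
    return np.mean((i - j) ** 2)


def local_ncc(i, j, k=3, eps=1e-8):
    """Average normalized cross-correlation over all k x k windows."""
    win_i = sliding_window_view(i, (k, k))  # (H-k+1, W-k+1, k, k)
    win_j = sliding_window_view(j, (k, k))

    # Mean-center each window.
    d_i = win_i - win_i.mean(axis=(-2, -1), keepdims=True)
    d_j = win_j - win_j.mean(axis=(-2, -1), keepdims=True)

    # Correlation of each window pair, normalized by their energies.
    num = (d_i * d_j).sum(axis=(-2, -1))
    den = np.sqrt((d_i ** 2).sum(axis=(-2, -1)) * (d_j ** 2).sum(axis=(-2, -1)))
    return np.mean(num / (den + eps))


rng = np.random.default_rng(0)
image = rng.random((16, 16))
brighter = 2.0 * image + 3.0  # same content, different brightness and contrast

print(mse(image, brighter))        # large: MSE is sensitive to intensity changes
print(local_ncc(image, brighter))  # close to 1: NCC is not
```

As a training loss, you would minimize `mse` directly, and minimize the negative of `local_ncc`.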

Step 4: Updating the weights

Once the similarity is computed, you can use backpropagation to update the weights of the CNN.

Finally, loop over your dataset to train the deformable image registration network.
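The four steps can be put together in a short PyTorch sketch. It is a toy illustration under several assumptions: `TinyRegNet` is a hypothetical two-layer CNN standing in for a U-Net, the loss is the MSE, and the displacement field is expressed in the normalized [-1, 1] coordinates expected by `torch.nn.functional.grid_sample` rather than in pixels.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyRegNet(nn.Module):
    """Hypothetical stand-in for the registration CNN (often a U-Net)."""

    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(2, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(16, 2, kernel_size=3, padding=1),
        )

    def forward(self, source, target):
        # Step 1: (Source, Target) pair in, displacement field (N, 2, H, W) out.
        return self.net(torch.cat([source, target], dim=1))


def warp(source, flow):
    """Step 2: sample `source` at the identity grid plus the displacement."""
    n = source.shape[0]
    theta = torch.eye(2, 3, device=source.device).unsqueeze(0).repeat(n, 1, 1)
    grid = F.affine_grid(theta, source.shape, align_corners=True)  # (N, H, W, 2)
    return F.grid_sample(source, grid + flow.permute(0, 2, 3, 1), align_corners=True)


def train(pairs, epochs=5, lr=1e-3):
    """Loop over (source, target) pairs of shape (N, 1, H, W)."""
    model = TinyRegNet()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    losses = []
    for _ in range(epochs):
        for source, target in pairs:
            flow = model(source, target)       # Step 1: displacement field
            warped = warp(source, flow)        # Step 2: warped image
            loss = F.mse_loss(warped, target)  # Step 3: similarity
            optimizer.zero_grad()
            loss.backward()                    # Step 4: backpropagation
            optimizer.step()
            losses.append(loss.item())
    return model, losses


source = torch.rand(1, 1, 16, 16)
target = torch.roll(source, shifts=1, dims=3)  # toy target: source shifted by 1 px
model, losses = train([(source, target)], epochs=3)
print(losses)
```

Real pipelines usually add a regularization term on the displacement field to keep it smooth; it is left out here to stay close to the four steps described above.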

Going Further

This article presents the key principles of deformable registration. I hope you enjoyed it! Image registration is a broad field with an extensive research literature.

If you want to go further in understanding and mastering deformable image registration, these links may be useful:

- An implementation of a deep deformable registration network (VoxelMorph)

- A tutorial on Google Colab about VoxelMorph

- An article about optimal transport for Diffeomorphic Registration

If you are looking for Image Recognition Experts, don't hesitate to contact us!

Thanks to Etienne Bennequin and Bastien Ponchon.